ETL is dead; Long live streams

My notes from the webinar: ETL is dead; Long live streams

- Traditional model: an operational DB and a data warehouse, with data flowing from the operational DB to the warehouse no more than a few times a day

- Challenges of the traditional approach:
  - Single-server DBs are being replaced by distributed data platforms
  - More data sources, e.g. logs, sensors, and metrics, not just relational data
  - Faster data processing is needed
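The traditional model can be illustrated with a minimal sketch. It assumes hypothetical in-memory `operational_db` and `warehouse` lists and a hypothetical `run_batch_etl` job that runs only a few times a day. The point is the staleness this creates: a row written after a run stays invisible in the warehouse until the next run.

```python
# Hypothetical stand-ins for the operational DB and the data warehouse.
operational_db = []   # rows written by the application as events happen
warehouse = []        # analytical copy, refreshed only by batch runs
last_loaded = 0       # number of operational rows already loaded

def run_batch_etl():
    """Hypothetical batch job: extract new rows, transform them, load them."""
    global last_loaded
    new_rows = operational_db[last_loaded:]                    # Extract
    transformed = [
        {"order_id": r["id"], "amount_usd": r["cents"] / 100}  # Transform
        for r in new_rows
    ]
    warehouse.extend(transformed)                              # Load
    last_loaded = len(operational_db)

operational_db.append({"id": 1, "cents": 1250})
run_batch_etl()                                  # e.g. the nightly run
operational_db.append({"id": 2, "cents": 399})   # arrives after the run

print(len(warehouse))  # 1: order 2 is invisible until the next batch run
```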

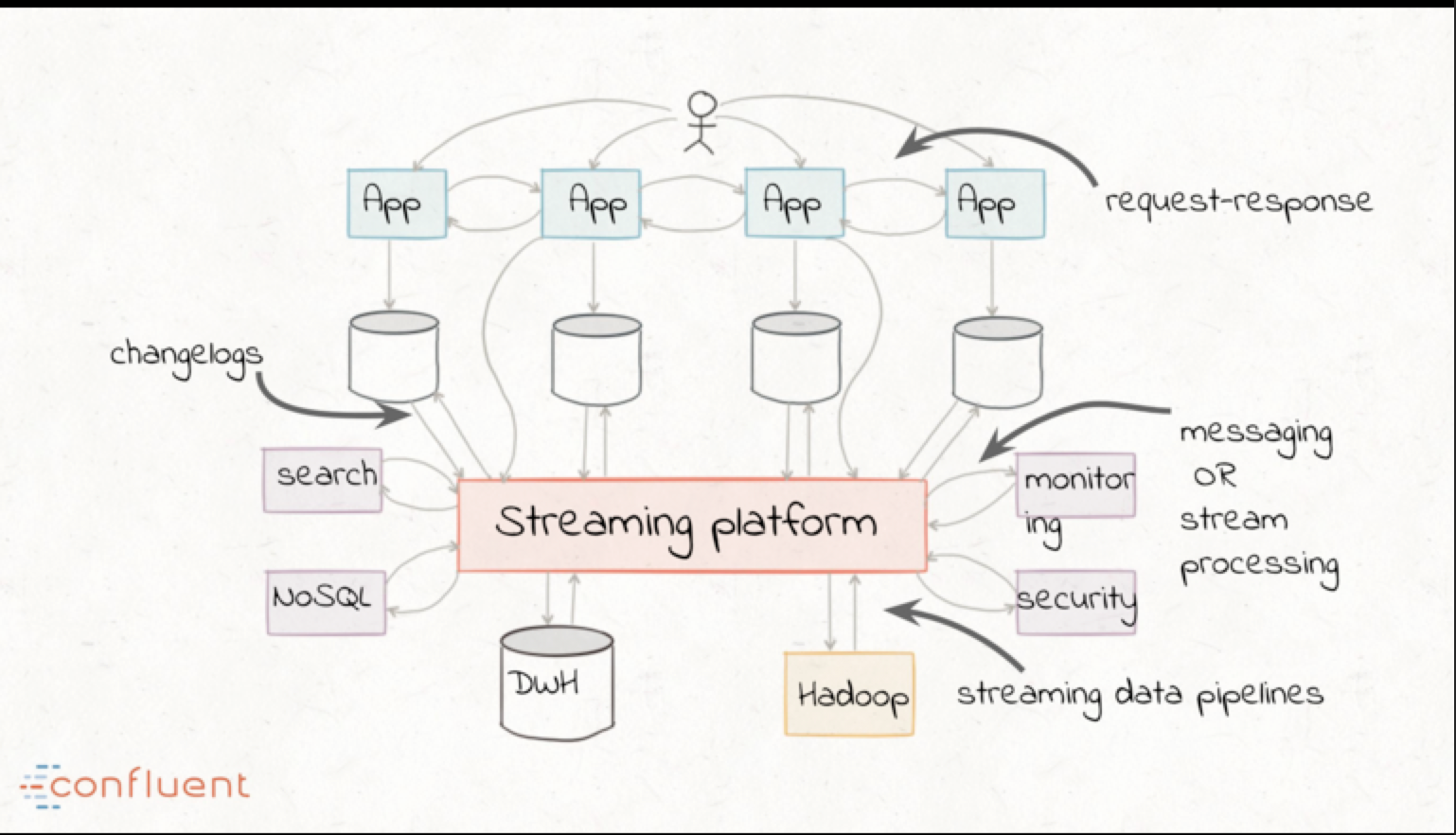

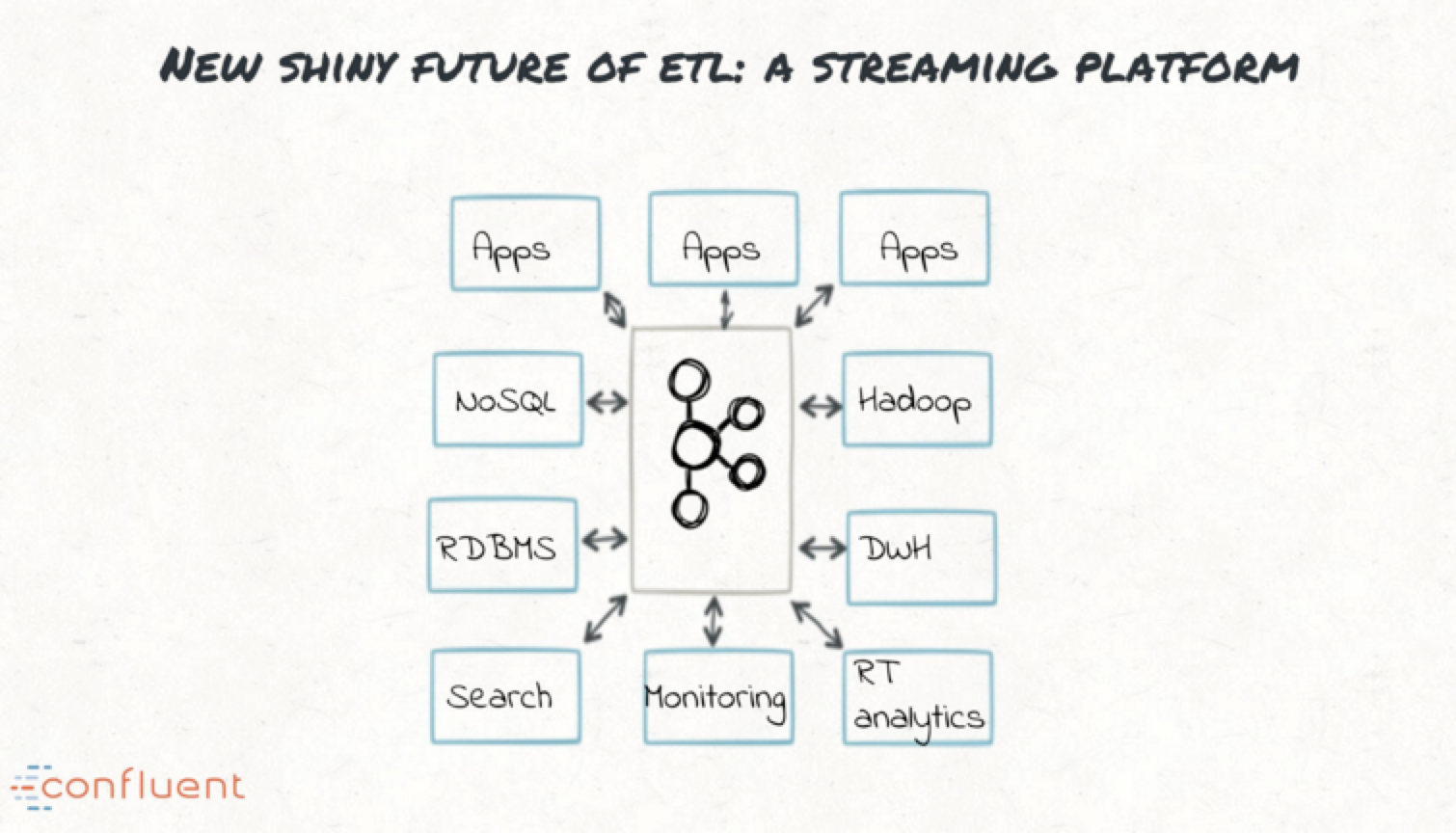

- Vision architecture:

- Traditional ETL drawbacks:
  - Needs a global schema
  - Data cleansing and curation are manual and error-prone
  - Operationally expensive
  - Batch processing paradigm

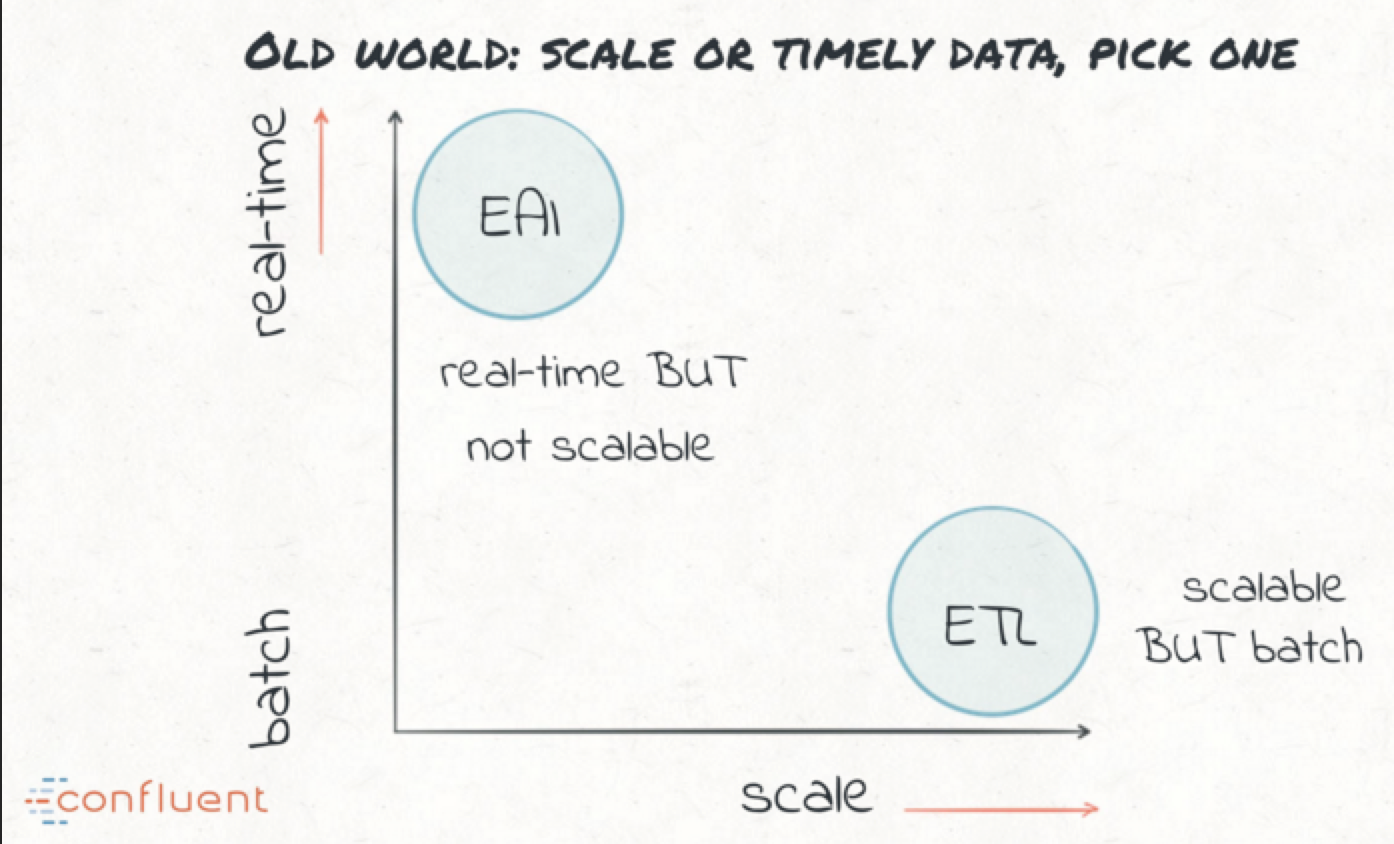

- Early take on real-time ETL: Enterprise Application Integration (EAI), which involved
  - ESBs (enterprise service buses)
  - MQs (message queues)
  - But these didn't scale

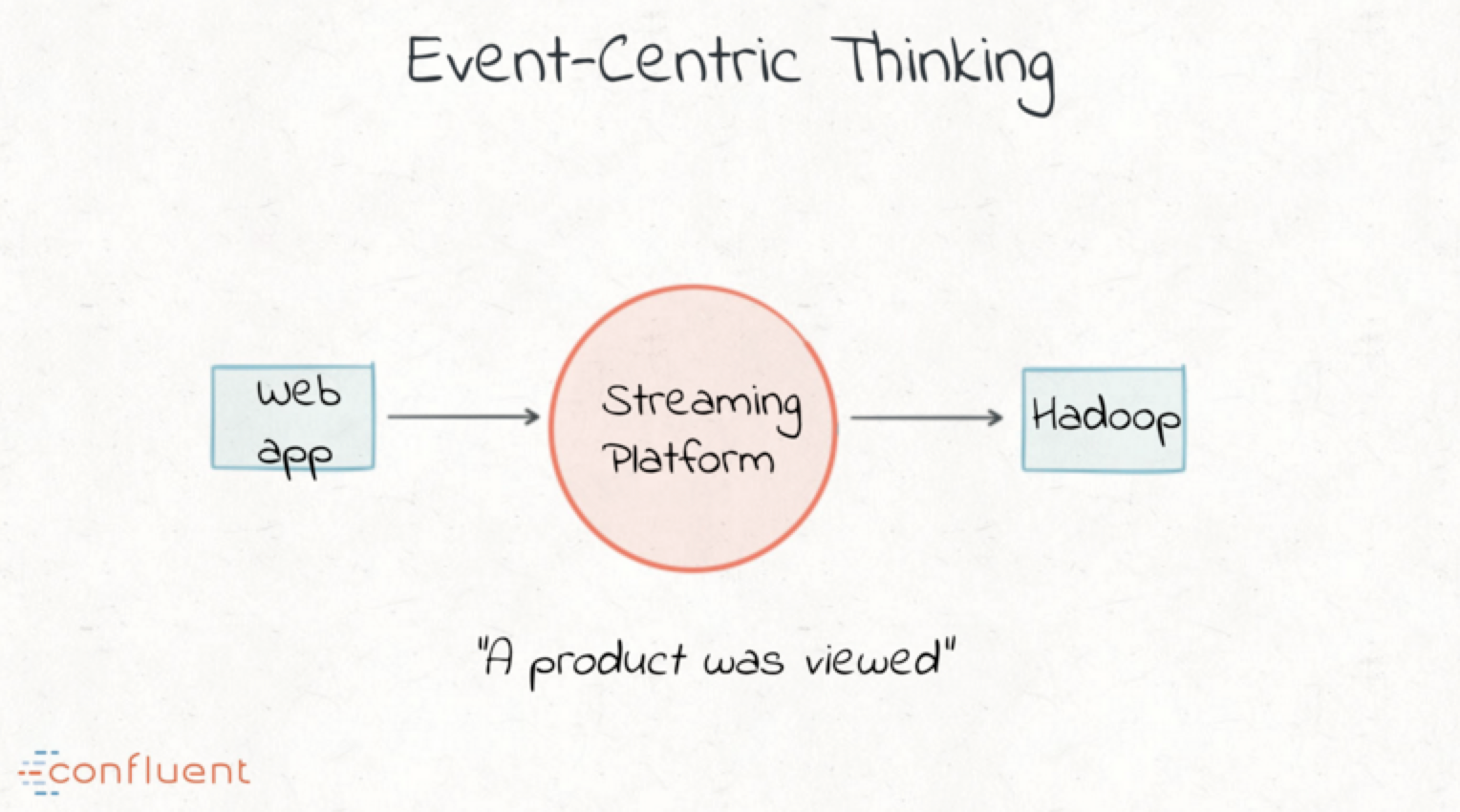

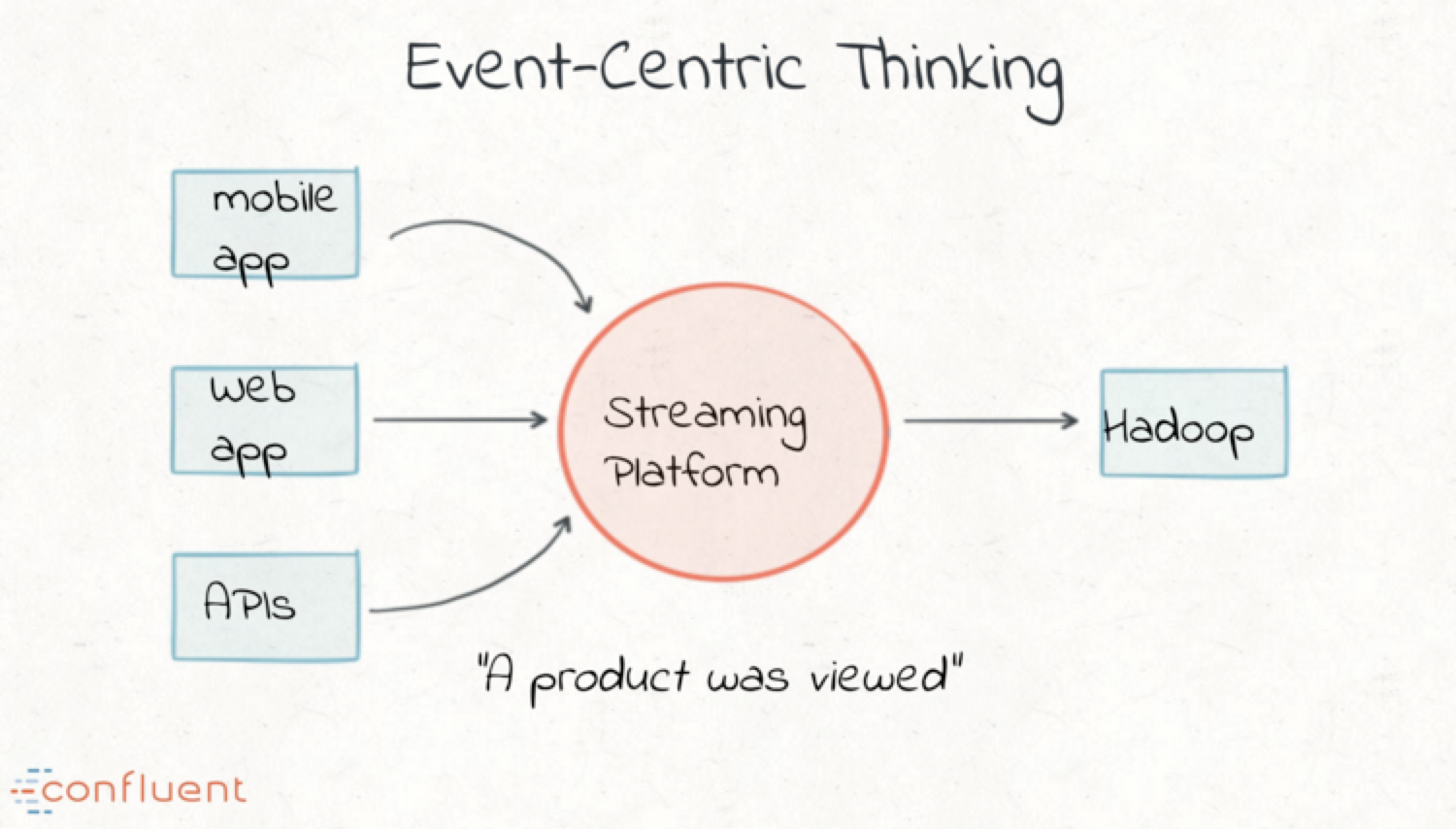

- Event-centric thinking
  - Decoupling via a pub-sub model isolates publishers and subscribers from each other
  - Forward-compatible data architecture: the ability to add more applications that process the same data, but differently

- Modern streaming approach
  - Apache Kafka
    - Open source distributed streaming platform
    - Log abstraction: append-only, with each reader/subscriber tracking its own offset
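The log abstraction is what makes the pub-sub decoupling and forward compatibility work. The sketch below models this under simplifying assumptions: a hypothetical single-partition, in-memory `Log` class, which is not Kafka's implementation. Records are only ever appended. Each reader keeps its own offset, so reading removes nothing for anyone else, and a subscriber added later can still replay the history. In this sketch new readers start at offset 0. In Kafka, where a new consumer group starts depends on its `auto.offset.reset` setting.

```python
class Log:
    """Hypothetical append-only log; each reader tracks its own offset."""

    def __init__(self):
        self._records = []
        self._offsets = {}  # reader name -> next offset to read

    def append(self, record):
        """Append a record and return its offset."""
        self._records.append(record)
        return len(self._records) - 1

    def poll(self, reader):
        """Return the records this reader hasn't seen yet and advance its offset."""
        start = self._offsets.get(reader, 0)  # new readers start at offset 0 here
        batch = self._records[start:]
        self._offsets[reader] = len(self._records)
        return batch

log = Log()
log.append("order-1")
log.append("order-2")
print(log.poll("billing"))    # ['order-1', 'order-2']

log.append("order-3")
print(log.poll("billing"))    # ['order-3']

# A subscriber added later still sees the full history.
print(log.poll("analytics"))  # ['order-1', 'order-2', 'order-3']
```

Because each offset belongs to its reader, the publisher never needs to know how many subscribers exist. Adding one is just a new name passed to `poll`.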

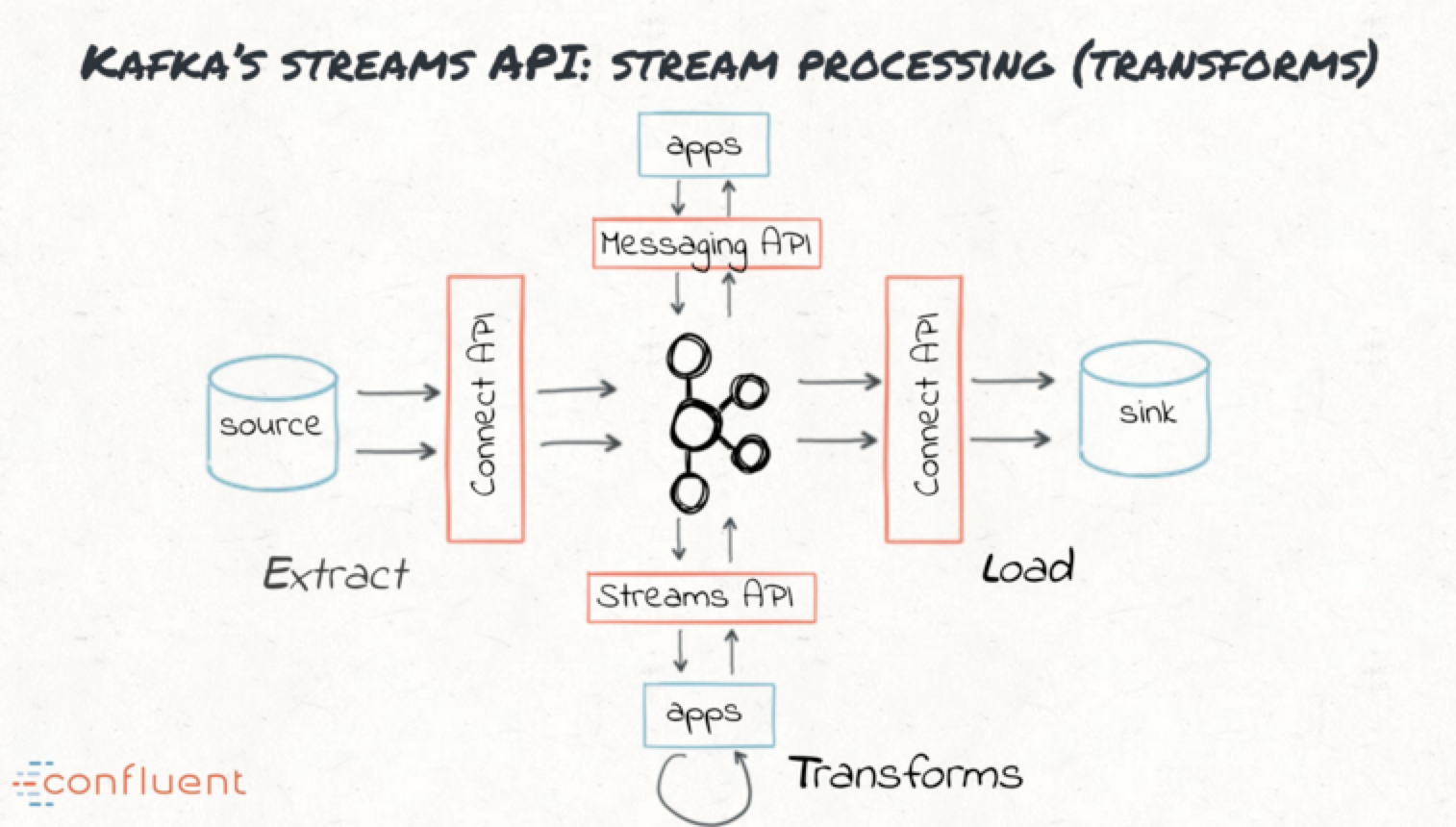

- Messaging APIs

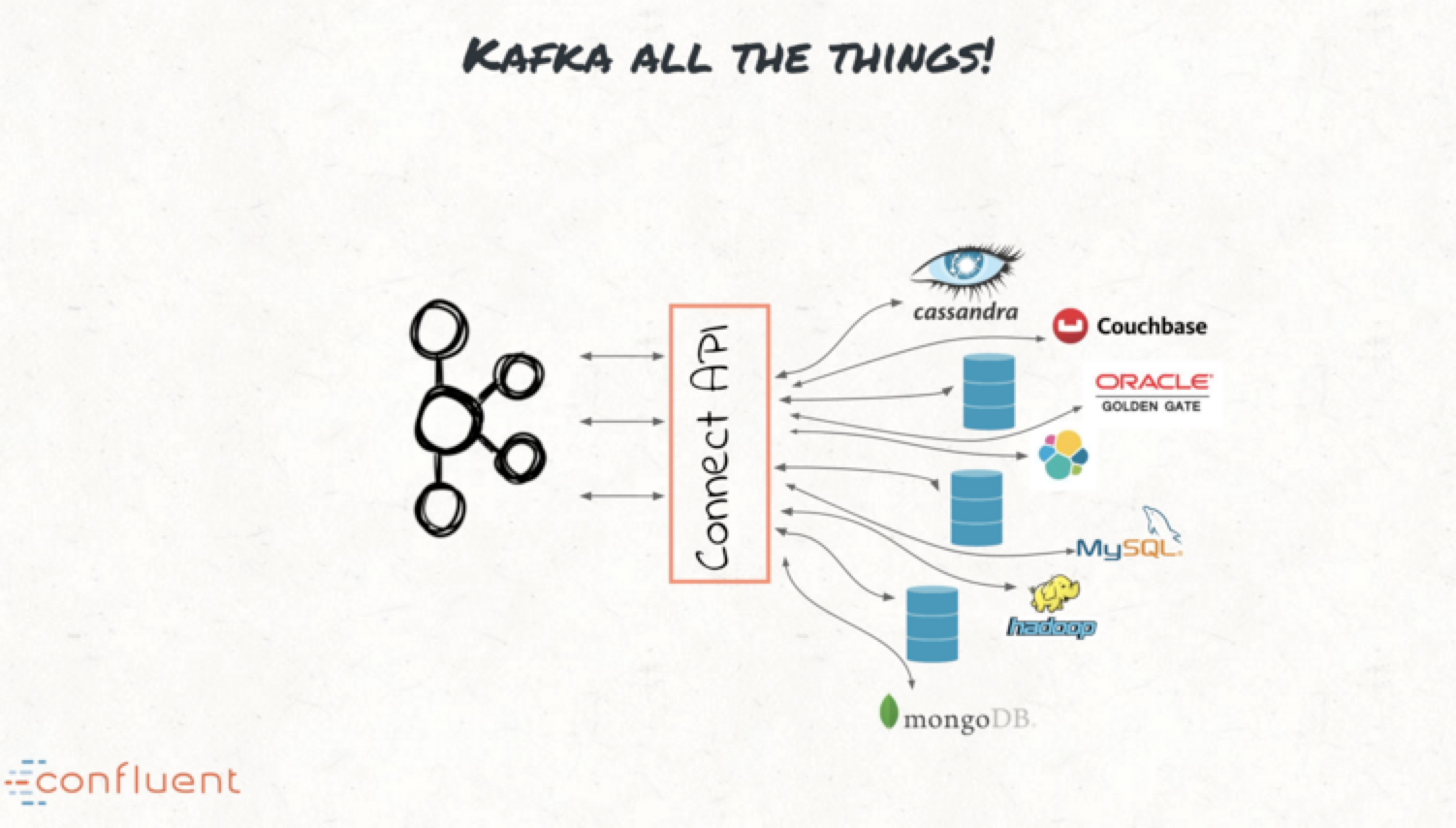

- Connect API: the E & L of ETL
  - Sources and sinks

- Streams API: the T of ETL
  - A Java library
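The E/T/L split can be illustrated as a chain of Python generators. This is a sketch under stated assumptions: `source`, `transform`, and `sink` are hypothetical functions, and this is neither the Kafka Connect API nor the Kafka Streams API (the latter is a Java library). It only shows how the work divides between a source connector (E), a stream transformation (T), and a sink connector (L). Each record is processed as it arrives rather than in a periodic batch.

```python
from collections import defaultdict

def source(lines):
    """E: hypothetical source connector turning raw log lines into records."""
    for line in lines:
        user, action = line.split(",")
        yield {"user": user.strip(), "action": action.strip()}

def transform(records):
    """T: keep only clicks and emit an updated per-user count for each one."""
    counts = defaultdict(int)
    for record in records:
        if record["action"] == "click":
            counts[record["user"]] += 1
            yield record["user"], counts[record["user"]]

def sink(updates, table):
    """L: hypothetical sink connector upserting results into a key-value table."""
    for user, count in updates:
        table[user] = count

raw = ["alice, click", "bob, view", "alice, click", "bob, click"]
table = {}
sink(transform(source(raw)), table)
print(table)  # {'alice': 2, 'bob': 1}
```

In Kafka Streams, a comparable transformation would roughly be a `filter` followed by `groupByKey()` and `count()` on a `KStream`. The continuously updated result there is a `KTable` rather than a Python dict.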